A/B testing your emails is the key to higher returns. By systematically optimizing your campaigns, you can identify the subject lines, content, and send times that drive the most engagement and conversions.

Launching email campaigns without testing is like navigating without a compass, which is why A/B testing is essential for achieving measurable success.

A/B testing your emails is the process of comparing two versions of an email campaign to determine which performs better. By systematically testing different elements, you can dramatically improve your email open rates, click-through rates, and conversion rates.

In this comprehensive guide, we’ll walk you through everything you need to know about email A/B testing, from basic concepts to advanced strategies that will transform your email marketing performance.

What is A/B Testing Emails?

Email A/B testing (also known as email split testing) is a controlled experiment in which you create two slightly different versions of the same email and send them to randomly selected, comparable subsets of your audience. The version that performs better becomes your “winner” and is sent to the remaining subscribers.

Key Components of Email A/B Testing:

- Version A (Control): Your original email design or content

- Version B (Variation): The modified version with one changed element

- Test Group: A subset of your email list (typically 10-20%), split evenly between the two versions

- Performance Metrics: Measurable outcomes such as open rates or click-through rates

- Statistical Significance: Evidence that the difference between versions is reliable and not due to chance
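To make these components concrete, here is a minimal sketch of how a list might be divided into Version A, Version B, and the remaining subscribers. The function name `split_for_ab_test`, the 20% test fraction, and the example addresses are illustrative assumptions; most email platforms handle this step for you.

```python
import random

def split_for_ab_test(subscribers, test_fraction=0.2, seed=42):
    """Randomly split subscribers into (group_a, group_b, remainder).

    Hypothetical helper: test_fraction is the share of the list used for
    the test, divided evenly between the two versions.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")
    shuffled = list(subscribers)
    # A seeded shuffle keeps the split reproducible while still random.
    random.Random(seed).shuffle(shuffled)
    half = round(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:half]            # receives Version A (control)
    group_b = shuffled[half:2 * half]    # receives Version B (variation)
    remainder = shuffled[2 * half:]      # receives the winner later
    return group_a, group_b, remainder

subscribers = [f"user{i}@example.com" for i in range(10_000)]
group_a, group_b, remainder = split_for_ab_test(subscribers, test_fraction=0.2)
print(len(group_a), len(group_b), len(remainder))
```

Shuffling before splitting matters: if you simply took the first 10% of the list for Version A, that group might differ systematically (for example, your oldest subscribers), which would bias the comparison.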

The Critical Role of Split Testing in Email Campaign Performance

1. Data-Driven Decision Making

Rather than relying on assumptions, systematic email testing gives you concrete data about what resonates with your subscribers and drives meaningful engagement. This replaces costly guesswork and focuses your efforts on proven strategies.

2. Improved Email Campaign Performance

Regular email campaign optimization can lead to:

- 20-50% increases in open rates

- 15-30% improvements in click-through rates

- 10-25% boosts in conversion rates

- Enhanced email deliverability

3. Better Understanding of Your Audience

Email testing methodology reveals valuable insights about subscriber behavior, preferences, and engagement patterns that inform your broader marketing strategy.

4. Competitive Advantage

Surprisingly, 39% of businesses still don’t test their emails regularly, giving you a significant edge over competitors who rely on outdated practices.

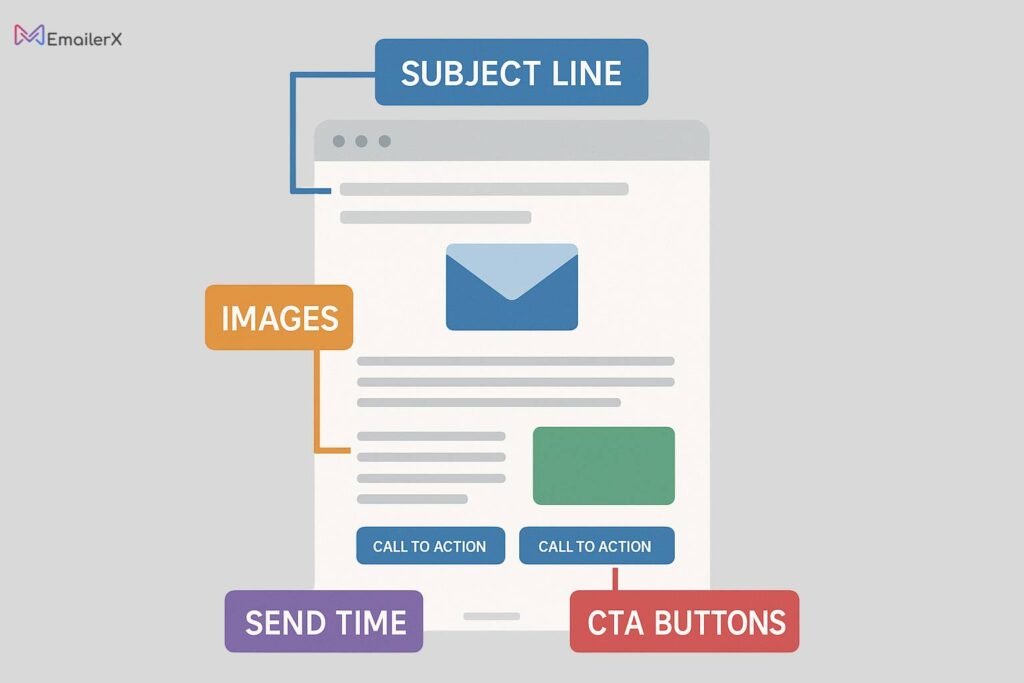

Essential Elements to Test in Your Email Campaigns

Subject Line A/B Testing: The Gateway to Success

The subject line is the first thing subscribers see, so testing it is one of the most direct ways to boost your open rates.

Elements to Test:

- Length

- Personalization

- Emoji usage

- Question vs. statement format

- Urgency indicators

Email Design Testing: Visual Impact Matters

Email design testing focuses on the visual elements that influence engagement:

- Layout: Single column vs. multi-column designs

- Images: Hero images vs. product galleries

- Colors: Brand colors vs. high-contrast alternatives

- Typography: Font sizes and styles

- White space: Minimal vs. generous spacing

Call to Action Testing: Driving Conversions

Your CTA is where conversions happen. Test these elements:

- Button text: “Shop Now” vs. “Get Started” vs. “Learn More”

- Button color: Brand colors vs. contrasting colors

- Button size: Large vs. medium buttons

- CTA placement: Above vs. below the fold

- Number of CTAs: Single vs. multiple calls to action

Email Personalization Testing: Making It Personal

Email personalization testing goes beyond just using first names:

- Dynamic content: Location-based offers

- Behavioral triggers: Based on past purchases

- Segmentation: New vs. returning customers

- Content relevance: Industry-specific messaging

Email Send Time Testing: Timing is Everything

Send time tests reveal when your subscribers are most likely to open and engage:

- Day of week: Tuesday vs. Thursday vs. weekend

- Time of day: Morning vs. afternoon vs. evening

- Frequency: Weekly vs. every-other-week campaigns

- Time zones: Localized send times vs. single time zone

Step-by-Step Guide to A/B Testing Your Emails

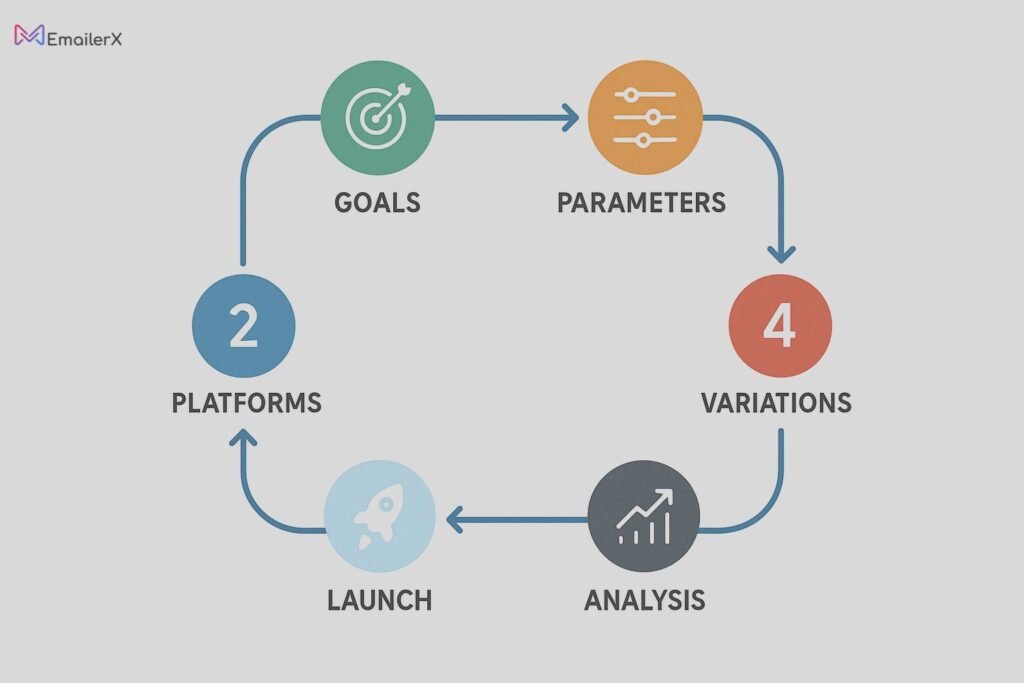

Step 1: Define Your Testing Goals

Before starting any test, establish clear objectives:

- Increase open rates: Focus on subject lines and sender names

- Boost click-through rates: Test content, design, and CTAs

- Improve conversions: Test landing page alignment and offers

- Enhance deliverability: Test send times and sender reputation

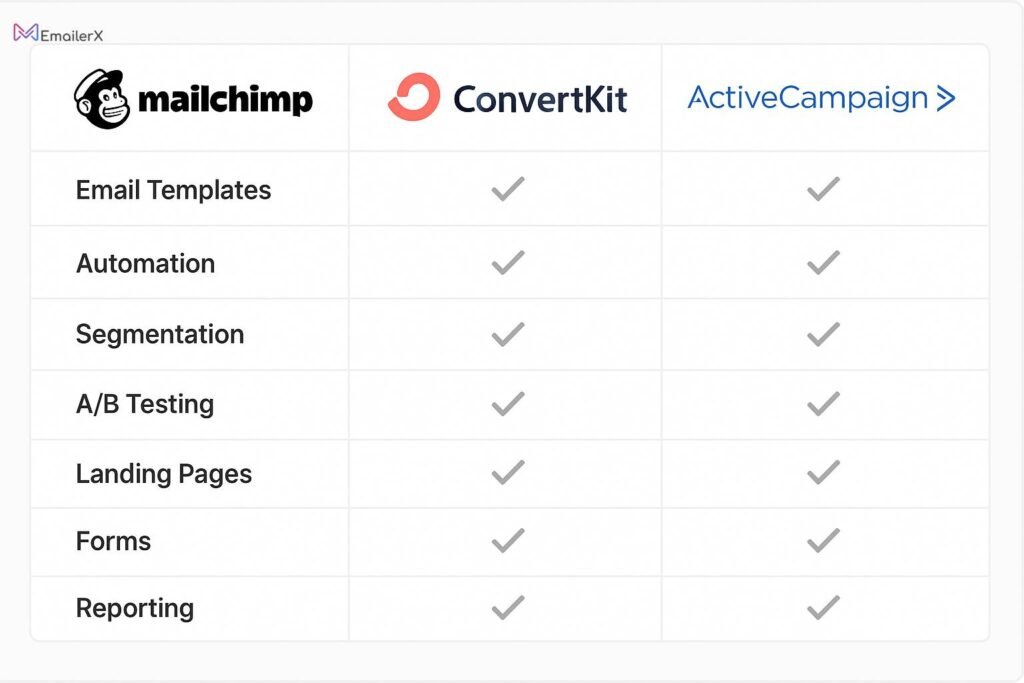

Step 2: Choose Your Testing Platform

Select an email marketing testing tool that offers robust A/B testing features:

Recommended Platforms:

- Mailchimp: User-friendly with built-in A/B testing

- ConvertKit: Advanced automation and testing features

- ActiveCampaign: Comprehensive testing and analytics

- Campaign Monitor: Professional-grade testing tools

Step 3: Set Up Your Test Parameters

Statistical Significance Requirements:

- Sample size: Minimum 1,000 subscribers per variation

- Test duration: Run for at least 24-48 hours

- Confidence level: Aim for a 95% confidence level (p < 0.05)

- Test split: 10-20% for testing, remainder gets winning version
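The 1,000-subscriber minimum is a rule of thumb; the number you actually need depends on your baseline rate and the size of the lift you hope to detect. As a rough sketch, the standard two-proportion sample size formula can be computed with Python's standard library. The function name and the 80% statistical power are assumptions that this guide does not specify.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline_rate, expected_rate,
                              confidence=0.95, power=0.80):
    """Estimate subscribers needed per variation (two-sided test).

    Hypothetical helper; power=0.80 is an assumed convention.
    """
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to estimate a sample size")
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)

# Example: detecting a lift in open rate from 20% to 25%.
needed = sample_size_per_variation(0.20, 0.25)
print(f"Subscribers needed per variation: {needed}")
```

With these example inputs the estimate lands close to the 1,000-per-variation guideline, but smaller expected lifts push the requirement up quickly, so it is worth running the numbers for your own rates.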

Step 4: Create Your Variations

Best Practices:

- Change only ONE element at a time

- Make meaningful differences (not subtle tweaks)

- Ensure both versions align with your brand

- Test contrasting approaches, not similar ones

Step 5: Launch and Monitor Your Test

- Send both versions simultaneously

- Avoid making changes mid-test

- Monitor for deliverability issues

- Track your chosen metrics consistently

Step 6: Analyze Results and Implement

- Wait for statistical significance before concluding

- Look beyond surface metrics to understand “why”

- Document findings for future reference

- Apply learnings to subsequent campaigns
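If your platform does not report significance, one common way to check it is a two-proportion z-test. The sketch below is illustrative only: the function name and the example counts (200 vs. 250 opens out of 1,000 sends each) are made up, and your platform may use a different statistical method.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Return (z, p_value) for a two-sided test of rate_b vs. rate_a."""
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    # Pool both groups to estimate the rate under "no real difference".
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so nothing to detect
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: Version A got 200 opens, Version B got 250.
z, p_value = two_proportion_z_test(200, 1000, 250, 1000)
significant = p_value < 0.05  # 95% confidence level
print(f"z = {z:.2f}, p = {p_value:.4f}, significant: {significant}")
```

A p-value below 0.05 corresponds to the 95% confidence level used throughout this guide. Decide on the sample size before you launch and check the result once, since repeatedly peeking and stopping at the first significant reading inflates false positives.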

Advanced Email Testing Strategies

Email Automation A/B Testing

Email automation A/B testing involves testing your triggered email sequences:

- Welcome series: Test different email sequences and timing

- Abandoned cart emails: Test urgency vs. helpful content

- Re-engagement campaigns: Test different win-back approaches

- Birthday emails: Test personal vs. promotional content

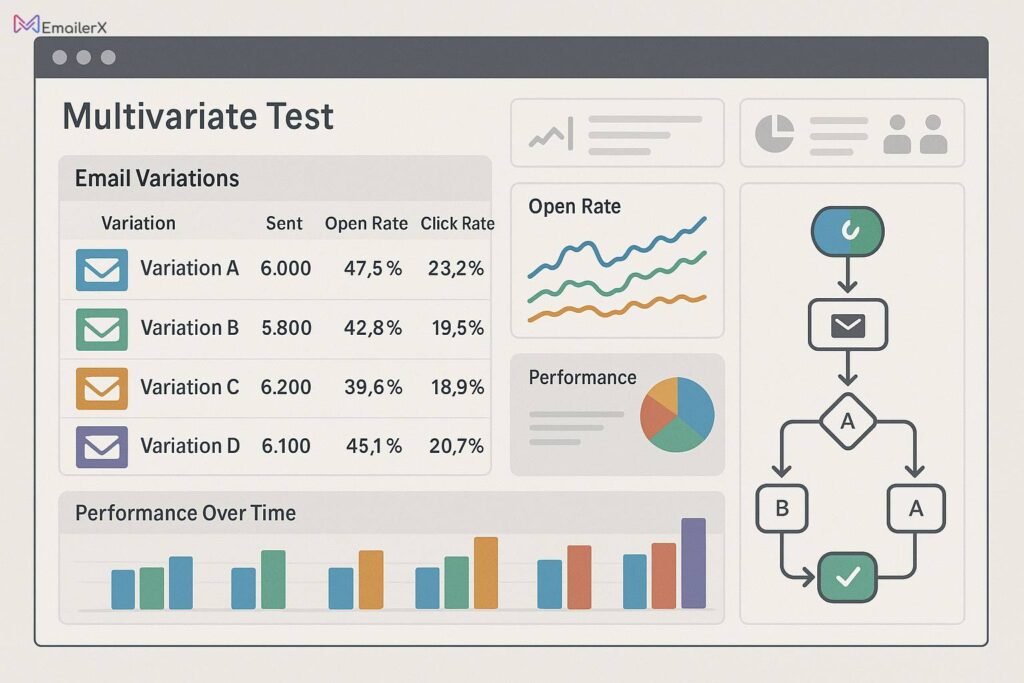

Multivariate Email Testing

Multivariate email testing allows you to test multiple elements simultaneously:

- Test combinations of subject lines, images, and CTAs

- Requires larger sample sizes (10,000+ subscribers)

- More complex analysis but deeper insights

- Best for high-volume senders with diverse audiences
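To see why multivariate tests need larger lists, it helps to count the combinations. This short sketch uses placeholder subject lines, images, and CTA texts (all illustrative) and shows how a 10,000-subscriber test group gets divided.

```python
from itertools import product

# Placeholder options for each element under test.
subject_lines = ["Question subject line", "Statement subject line"]
hero_images = ["Hero image", "Product gallery"]
cta_texts = ["Shop Now", "Get Started", "Learn More"]

# Every combination of the three elements becomes its own email version.
variations = [
    {"subject": subject, "image": image, "cta": cta}
    for subject, image, cta in product(subject_lines, hero_images, cta_texts)
]

subscribers_per_variation = 10_000 // len(variations)
print(f"{len(variations)} variations, "
      f"about {subscribers_per_variation} subscribers each")
```

Two subject lines, two images, and three CTAs already produce 12 versions, so each one receives only a fraction of the test group. Adding another element multiplies the count again, which is why this approach suits high-volume senders.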

Cold Email A/B Testing and Sales Email Testing Strategies

B2B outreach and lead generation emails call for their own tests:

Elements to Test:

- Opening lines: Personal vs. professional approaches

- Value propositions: Feature-focused vs. benefit-focused

- Email length: Short vs. detailed emails

- Social proof: Testimonials vs. case studies vs. logos

- Follow-up timing: Immediate vs. spaced sequences

Tools for Cold Email Testing:

- Outreach.io: Advanced sales email testing

- Salesloft: Comprehensive sales engagement platform

- Reply.io: Automated cold email sequences with testing

Email Deliverability Testing

Email deliverability testing ensures your emails reach the inbox:

- Sender reputation monitoring: Use tools like Sender Score

- Email client compatibility: Test across Gmail, Outlook, Apple Mail

- Spam filter testing: Use tools like Mail Tester

- Authentication setup: Implement SPF, DKIM, and DMARC

Tools and Software for Email A/B Testing Success

All-in-One Email Marketing Platforms

- Mailchimp – Best for beginners

  - Built-in A/B testing

  - Intuitive interface

  - Comprehensive analytics

  - Free plan available

- ConvertKit – Best for creators

  - Advanced automation testing

  - Subscriber tagging and segmentation

  - Visual automation builder

  - 14-day free trial

- ActiveCampaign – Best for advanced users

  - Multivariate testing capabilities

  - Predictive analytics

  - CRM integration

  - Machine learning optimization

Specialized Testing Tools

- Litmus – Email testing and analytics

  - Email preview across devices

  - Spam filter testing

  - Advanced analytics

  - Email optimization insights

- Email on Acid – Email testing platform

  - Email client testing

  - Accessibility testing

  - Campaign optimization

  - Deliverability monitoring

Analytics and Optimization Tools

- Google Analytics – Free website analytics

  - Email campaign tracking

  - Conversion attribution

  - Audience insights

  - Goal tracking

- Optimizely – A/B testing platform

  - Statistical significance calculator

  - Advanced testing features

  - Integration capabilities

  - Enterprise-grade analytics

Common A/B Testing Mistakes to Avoid

1. Testing Too Many Elements at Once

Stick to one variable per test to ensure clear results.

2. Ending Tests Too Early

Wait until your results reach statistical significance, typically a 95% confidence level.

3. Using Insufficient Sample Sizes

Small sample sizes lead to unreliable results. Use sample size calculators.

4. Ignoring Segment Differences

Different audience segments may respond differently to the same test.

5. Not Testing Regularly

Email campaign performance changes over time. Test continuously.

6. Focusing Only on Open Rates

Consider the entire funnel: opens → clicks → conversions → revenue.

Measuring Success: Key Email Engagement Metrics

Primary Metrics

Email Open Rates

Industry average: 20-25%

Good performance: 25-30%

Excellent performance: 30%+

Email Click-Through Rates

Industry average: 2-5%

Good performance: 5-10%

Excellent performance: 10%+

Email Conversion Rates

Industry average: 1-3%

Good performance: 3-5%

Excellent performance: 5%+

Secondary Metrics

- Click-to-open rate (CTOR): Clicks divided by opens

- Revenue per email: Total revenue divided by emails sent

- List growth rate: New subscribers minus unsubscribes, divided by total list size

- Forward rate: How often emails are shared

- Unsubscribe rate: Should remain below 2%
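These secondary metrics are simple ratios, and the sketch below shows one way to compute them. The function and field names are hypothetical, the example figures are invented, and the denominators for list growth rate (list size), forward rate, and unsubscribe rate (emails sent) are assumptions, since platforms define them slightly differently.

```python
def ratio(numerator, denominator):
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0

def secondary_metrics(sent, opens, clicks, forwards, unsubscribes,
                      new_subscribers, list_size, revenue):
    """Compute secondary email metrics as fractions (0.16 means 16%)."""
    return {
        "ctor": ratio(clicks, opens),                 # clicks / opens
        "revenue_per_email": ratio(revenue, sent),    # revenue / sent
        # Assumed definition: net new subscribers relative to list size.
        "list_growth_rate": ratio(new_subscribers - unsubscribes, list_size),
        "forward_rate": ratio(forwards, sent),        # assumed: per email sent
        "unsubscribe_rate": ratio(unsubscribes, sent),
    }

# Invented campaign figures, for illustration only.
metrics = secondary_metrics(
    sent=10_000, opens=2_500, clicks=400, forwards=50,
    unsubscribes=30, new_subscribers=120, list_size=10_000, revenue=1_500.0,
)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Computing the same ratios for Version A and Version B side by side makes it easier to spot a variation that wins on opens but loses on revenue or unsubscribes.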

Frequently Asked Questions

How long should I run an A/B test for my emails?

Run tests for at least 24-48 hours to account for differences in when subscribers check their inboxes. For weekly newsletters, consider running for a full week to capture various subscriber behaviors.

What sample size do I need for reliable email A/B testing?

Aim for at least 1,000 subscribers per variation (2,000 total minimum). Use online sample size calculators to determine the exact number based on your expected improvement and confidence level.

Can I test multiple elements in one email campaign?

While possible (multivariate testing), it’s recommended to test one element at a time, especially for beginners. This makes it easier to identify which change drove the results.

How often should I A/B test my emails?

Test every major campaign and continuously optimize your automated sequences. High-volume senders should aim to test at least 2-3 elements per month.

What’s the difference between A/B testing and multivariate testing?

A/B testing compares two versions with one changed element. Multivariate testing examines multiple elements simultaneously, requiring larger sample sizes but providing deeper insights into element interactions.

Should I test the same element multiple times?

Yes! Winning variations can become new baselines for further testing. Email preferences change over time, so re-test successful elements periodically.

How do I know if my test results are statistically significant?

Use statistical significance calculators or rely on your email platform’s built-in tools. Look for 95% confidence levels and sufficient sample sizes before declaring a winner.

What should I do if my A/B test shows no clear winner?

No significant difference is still valuable data! It might indicate that the tested element isn’t impactful for your audience, or you might need to test more dramatic variations.